What is the Deep/Dark Web?

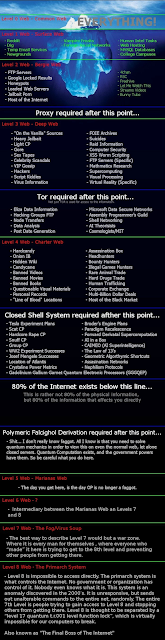

The Deep Web is defined as the parts of the World Wide Web whose contents are not indexed by standard search engines, for whatever reason.

This happens partly because the HTTP servers of the Deep Web and/or Dark Web tell search engines (including Google) to buzz off, with a suitable robots.txt file, by recognizing their web crawlers and denying them access, or both (that blacklist must be fun to maintain). Think of it as a version of search engine optimization that optimizes for the opposite of what one usually expects.

Google quite reasonably respects the crawling permission limits found in websites' robots.txt files, per the relevant Web Standards.
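To make the robots.txt mechanism concrete, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The robots.txt rules, the `example.com` URLs and the `SomeOtherBot` agent name are hypothetical; a real crawler would fetch the file from the site with `set_url()` and `read()` instead of parsing an inline string. Note that robots.txt only tells a well-behaved crawler what it may fetch; it does not technically stop one that ignores it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block Googlebot everywhere, block every
# other crawler only from /private/. Not taken from any real site.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /

User-agent: *
Disallow: /private/
"""


def can_crawl(user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules above allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())  # parse inline text instead of fetching
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    checks = [
        ("Googlebot", "https://example.com/index.html"),
        ("SomeOtherBot", "https://example.com/index.html"),
        ("SomeOtherBot", "https://example.com/private/report.html"),
    ]
    for agent, url in checks:
        print(f"{agent:<13} {url:<42} allowed={can_crawl(agent, url)}")
```

With these rules, Googlebot is refused everywhere, while other crawlers are refused only under /private/.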

The deep web is the unindexed part of the web.

The Dark Web is just a small part of this deep web.

In 2001, the term deep web was introduced by the computer scientist Michael Bergman.

He used this term to describe the part of the web that is hidden behind HTML forms and paywalls.

The term “Deep Web” was coined in 2001 by BrightPlanet, an Internet search technology company that specializes in searching deep Web content.

Military origins of the Deep Web: like other areas of the Internet, the Deep Web began to grow with help from the U.S. military, which sought a way to communicate with intelligence assets and Americans stationed abroad without being detected. Paul Syverson, David Goldschlag and Michael Reed, researchers at the Naval Research Laboratory, began working on the concept of “onion routing” in 1995. Their research later developed into The Onion Routing project, better known as Tor, whose first public version was released in 2002.

The U.S. Navy released the Tor code to the public in 2004, and in 2006 a group of developers formed the Tor Project and released the service currently in use. The content of the deep web can be accessed only with the help of a direct URL or IP address.
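Onion routing gets its name from layered encryption: the sender wraps a message in one layer per relay, and each relay can remove only its own layer. The toy Python sketch below illustrates that idea with a SHA-256-based keystream and XOR. It is an illustration only and is not secure; the relay keys are hypothetical pre-shared values, whereas real Tor negotiates a separate key with each relay on the path and uses proper cryptography.

```python
import hashlib

# Toy illustration of layered ("onion") encryption. NOT secure: real Tor
# negotiates a fresh key with each relay and uses vetted ciphers.


def keystream(key: bytes, length: int) -> bytes:
    """Derive `length` pseudo-random bytes from key using SHA-256 in counter mode."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_layer(key: bytes, data: bytes) -> bytes:
    """Add or remove one layer; XOR with the same keystream undoes itself."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def wrap(message: bytes, relay_keys: list[bytes]) -> bytes:
    """Sender: apply the exit relay's layer first and the entry relay's layer last."""
    onion = message
    for key in reversed(relay_keys):
        onion = xor_layer(key, onion)
    return onion


def route(onion: bytes, relay_keys: list[bytes]) -> bytes:
    """Relays, in path order (entry, middle, exit), each peel exactly one layer."""
    for key in relay_keys:
        onion = xor_layer(key, onion)
    return onion


if __name__ == "__main__":
    keys = [b"entry-key", b"middle-key", b"exit-key"]  # hypothetical shared keys
    onion = wrap(b"hello hidden service", keys)
    print(onion.hex())
    print(route(onion, keys))
```

Only after all three layers are peeled does the original message reappear; a relay holding just one key sees data that is still wrapped.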

Dark web websites use the .onion domain suffix. Just as you are familiar with .com, .net and .in, dark web content uses .onion sites.
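Unlike .com or .net names, .onion names are not bought from a DNS registrar; a v3 onion hostname is derived from the service's ed25519 public key. The sketch below follows the v3 layout as described in Tor's rend-spec-v3 (base32 of the public key, a 2-byte SHA3-256 checksum and a version byte) and also recognizes the older 16-character v2 format used by the example links in this post. The function names are hypothetical, and the test key is arbitrary bytes rather than a real ed25519 key.

```python
import base64
import hashlib
import string

BASE32_ALPHABET = set(string.ascii_lowercase + "234567")
V3_VERSION = b"\x03"


def _v3_checksum(pubkey: bytes) -> bytes:
    """First 2 bytes of SHA3-256(".onion checksum" || pubkey || version)."""
    return hashlib.sha3_256(b".onion checksum" + pubkey + V3_VERSION).digest()[:2]


def v3_address_from_pubkey(pubkey: bytes) -> str:
    """Build a v3 hostname: base32(pubkey || checksum || version) + ".onion"."""
    if len(pubkey) != 32:
        raise ValueError("expected a 32-byte ed25519 public key")
    raw = pubkey + _v3_checksum(pubkey) + V3_VERSION  # 35 bytes -> 56 base32 chars
    return base64.b32encode(raw).decode("ascii").lower() + ".onion"


def classify_onion(hostname: str) -> str:
    """Return "v2", "v3" or "invalid" for a bare .onion hostname (no subdomains)."""
    host = hostname.lower()
    if not host.endswith(".onion"):
        return "invalid"
    label = host[: -len(".onion")]
    if not label or not set(label) <= BASE32_ALPHABET:
        return "invalid"
    if len(label) == 16:
        return "v2"  # legacy 80-bit format; format check only, it has no checksum
    if len(label) == 56:
        raw = base64.b32decode(label.upper())
        pubkey, checksum, version = raw[:32], raw[32:34], raw[34:]
        if version == V3_VERSION and checksum == _v3_checksum(pubkey):
            return "v3"
    return "invalid"


if __name__ == "__main__":
    print(classify_onion("am4wuhz3zifexz5u.onion"))
    print(classify_onion(v3_address_from_pubkey(bytes(range(32)))))
```

Tor dropped support for v2 onion services in 2021, so 16-character addresses like the examples in this post no longer resolve in current Tor Browser releases.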

On these places, you can access vendors and virtual markets that allow an individual to barter goods for goods, stolen or legit, or the sale and procure of drugs, weapons, and other illegal possessions.

https://danielmiessler.com/study/internet-deep-dark-web/

In 2000, Michael Bergman compared searching on the Internet to dragging a net across the surface of the ocean: a great deal may be caught in the net, but there is a wealth of information that is deep and is therefore missed. Most of the web's information is buried far down on sites, and standard search engines do not find it. Traditional search engines cannot see or retrieve content in the deep web. The portion of the web that is indexed by standard search engines is known as the surface web. As of 2001, the deep web was several orders of magnitude larger than the surface web. The analogy of an iceberg has been used to represent the division between the surface web and the deep web.

Non-indexed content

Bergman, in a seminal paper on the deep Web published in The Journal of Electronic Publishing, mentioned that Jill Ellsworth used the term invisible Web in 1994 to refer to websites that were not registered with any search engine. Bergman cited a January 1996 article by Frank Garcia:

It would be a site that's possibly reasonably designed, but they didn't bother to register it with any of the search engines. So, no one can find them! You're hidden. I call that the invisible Web.

Another early use of the term Invisible Web was by Bruce Mount and Matthew B. Koll of Personal Library Software, in a description of the @1 deep Web tool found in a December 1996 press release.

The first use of the specific term Deep Web, now generally accepted, occurred in the aforementioned 2001 Bergman study.

Content types

Methods which prevent web pages from being indexed by traditional search engines may be categorized as one or more of the following:

Dynamic content: dynamic pages which are returned in response to a submitted query or accessed only through a form, especially if open-domain input elements (such as text fields) are used; such fields are hard to navigate without domain knowledge.

Unlinked content: pages which are not linked to by other pages, which may prevent web crawling programs from accessing the content. This content is referred to as pages without backlinks (also known as inlinks). Also, search engines do not always detect all backlinks from searched web pages.

Private Web: sites that require registration and login (password-protected resources).

Contextual Web: pages with content varying for different access contexts (e.g., ranges of client IP addresses or previous navigation sequence).

Limited access content: sites that limit access to their pages in a technical way (e.g., using the Robots Exclusion Standard, CAPTCHAs, or the no-store directive, which prohibit search engines from browsing them and creating cached copies).

Scripted content: pages that are only accessible through links produced by JavaScript as well as content dynamically downloaded from Web servers via Flash or Ajax solutions.

Non-HTML/text content: textual content encoded in multimedia (image or video) files or specific file formats not handled by search engines.

Software: certain content is intentionally hidden from the regular internet, accessible only with special software, such as Tor, I2P, or other darknet software. For example, Tor allows users to access websites using the .onion host suffix anonymously, hiding their IP address.

Web archives: Web archival services such as the Wayback Machine enable users to see archived versions of web pages across time, including websites which have become inaccessible, and are not indexed by search engines such as Google.
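Several of these categories (dynamic content behind forms, unlinked content and scripted content) come down to what a crawler can reach by following links. The minimal Python sketch below crawls a hypothetical in-memory site breadth-first, following only plain `<a href>` links as a naive crawler would. The page paths and HTML are made up for illustration; real search engine crawlers are far more sophisticated, and some do execute JavaScript.

```python
from collections import deque
from html.parser import HTMLParser

# Hypothetical in-memory "site": path -> HTML. Purely illustrative.
SITE = {
    "/": '<a href="/about">About</a> <form action="/search"><input name="q"></form>',
    "/about": '<a href="/">Home</a> <script>var u = "/hidden-" + "report";</script>',
    "/search": "<p>Results only appear after a query is submitted.</p>",  # dynamic
    "/hidden-report": "<p>Reachable only via a JavaScript-built link.</p>",  # scripted
    "/orphan": "<p>No page links here.</p>",  # unlinked
}


class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags; forms and scripts are ignored."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start: str = "/") -> set[str]:
    """Breadth-first crawl that only follows plain <a href> links."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        parser = LinkExtractor()
        parser.feed(SITE.get(page, ""))
        for link in parser.links:
            if link in SITE and link not in seen:
                seen.add(link)
                queue.append(link)
    return seen


if __name__ == "__main__":
    indexed = crawl()
    print("indexed:", sorted(indexed))
    print("missed:", sorted(set(SITE) - indexed))
```

Running it prints `indexed: ['/', '/about']` and `missed: ['/hidden-report', '/orphan', '/search']`: the form result, the JavaScript-built link and the orphan page all stay "deep".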

- https://web.archive.org/web/20120422070406/http://www.theepochtimes.com/n2/world/wikileaks-intercepted-private-communications-maintains-access-47274.html

- https://www.reddit.com/r/nosleep/comments/1hfnxg/journey_to_the_dark_web/

- https://www.torproject.org/docs/bridges

- https://www.scribd.com/doc/274571331/Privacy-and-Anonymity-Techniques-Today

- https://motherboard.vice.com/en_us/article/d73yd7/how-the-nsa-targets-tor-users

- http://www.straightdope.com/columns/read/3092/how-can-i-access-the-deep-dark-web/

- https://en.wikipedia.org/wiki/Silk_Road_(marketplace)

- https://thetinhat.com/tutorials/darknets/i2p.html

- https://cyberspacemysteries.blogspot.com/

- https://www.techadvisor.co.uk/how-to/internet/dark-web-3593569/

- https://www.propublica.org/nerds/a-more-secure-and-anonymous-propublica-using-tor-hidden-services

- https://darkwebnews.com/deep-web/#ftoc-how-to-access-the-deep-web

- https://www.whoishostingthis.com/blog/2017/03/07/tor-deep-web/

- https://darkwebacademy.com/

- https://en.wikipedia.org/wiki/.onion

- https://www.deepweb-sites.com/

Tor (The Onion Router)

What Are Onion Sites?

Examples of dark webs are Tor, I2P and Freenet.

- http://thehiddenwiki.org/

- https://thehiddenwiki.org/

- http://the-hidden-wiki.com/

- http://torhiddenwiki.com/

- https://ahmia.fi/

- http://www.thehiddenwiki.net/tor-links-directory/services/

- https://tails.boum.org/

- https://en.wikipedia.org/wiki/List_of_Tor_hidden_services

- https://www.reddit.com/r/onions/

- https://freenetproject.org/

- https://geti2p.net/en/

- https://www.reddit.com/r/deepweb/

- https://www.deepwebsiteslinks.com/

- https://www.deepdotweb.com/

- https://darknetmarkets.co/

- https://onion.to/

- https://dreammarketdrugs.com/top-10-most-popular-darknet-marketplaces/

- https://www.zeronet.io/

- https://hackercombat.com/the-best-10-deep-web-search-engines-of-2017/

- https://maidsafe.net/

- http://7g5bqm7htspqauum.onion/ Hidden Wiki - Tor Wiki Hidden Service link collection

- http://zgrl6sghf5jh37zz.onion/ Hidden Wiki - Onion Urls, Deep Web Links

- http://xqrqbzhii6m6sdrv.onion/ OnionDir - Deep Web Link Directory

- http://udsmewv45lunzoo4.onion/ TorLinks - Onion Links List Hidden Wiki mirror

- http://kpvz7kpmcmne52qf.onion/wiki/index.php/Main_Page

Examples of deep web links

http://am4wuhz3zifexz5u.onion

zqktlwi4fecvo6ri.onion

https://en.wikipedia.org/wiki/List_of_Internet_top-level_domains#Special-Use_Domains

Some deep web links can be opened in a regular browser simply by appending ".to" to the original .onion address.

For example, http://kpvz7ki2v5agwt35.onion/wiki/index.php/Main_Page

becomes http://kpvz7ki2v5agwt35.onion.to/wiki/index.php/Main_Page
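The ".to" trick is a plain URL rewrite: the .onion hostname gets a gateway suffix while the scheme, path, query and fragment stay the same. Below is a minimal sketch with Python's `urllib.parse`; `to_gateway_url` is a hypothetical helper, not part of any library. Keep in mind that Tor2web-style gateways such as onion.to fetch the page on your behalf, so they do not give you Tor's anonymity, and onion.to may no longer be operating.

```python
from urllib.parse import urlsplit, urlunsplit


def to_gateway_url(onion_url: str, gateway_suffix: str = ".to") -> str:
    """Append a gateway suffix to a .onion hostname, keeping path/query/fragment.

    Hypothetical helper; any user:password part of the URL is dropped.
    """
    parts = urlsplit(onion_url)
    host = parts.hostname or ""
    if not host.endswith(".onion"):
        raise ValueError(f"not a .onion URL: {onion_url}")
    netloc = host + gateway_suffix
    if parts.port is not None:
        netloc += f":{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


if __name__ == "__main__":
    print(to_gateway_url("http://kpvz7ki2v5agwt35.onion/wiki/index.php/Main_Page"))
```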

YouTube: https://youtu.be/7G1LjQSYM5Q

Dark web marketplaces generate $500,000,000 per day …

Estimates suggest that the deep Web consists of about 7,500 terabytes...


Summary

The Internet is where it’s easy to find things online because what you’re searching for is all in search engines.

The Deep Web is the part of the Internet that isn’t necessarily malicious, but is simply too large and/or obscure to be indexed due to the limitations of crawling and indexing software (like Google/Bing/Baidu).

The Dark Web is the part of the non-indexed Internet (the Deep Web) that is used by those who are purposely trying to control access, either because they have a strong desire for privacy or because what they’re doing is illegal.


Safety precautions:

- Don't trust anyone out there in the deep web.

- Cover your webcam with tape.

- Never download any files or software from the deep web.

- For some possible extra protection, type "about:config" in the address bar, search for "javascript.enabled" and change its value from "true" to "false".

- Don't use uTorrent or any other torrenting service while surfing the deep web.

- Install VMware Workstation on your PC/laptop.

- Download Kali Linux (the version supported by your system configuration).

- Install Kali Linux as a guest OS in VMware.

- Once you are done with the installation, log on to your Kali OS and create a new user.

- Log on to the new user account and download Tor Browser for Kali Linux. After downloading, install the browser and connect to the Internet.

- Once Tor Browser is open, you will see a search option on the homepage.

- Search for "The Hidden Wiki" (a collection of onion websites, etc.).

- You will get some strange-looking links; you may be surprised when you see them.

- Once you open one of those links, you will find many more website links there.

- Start browsing and have fun. (In recent years Facebook started its own dark web version; you will also find Blackbook as an alternative, some email providers, etc.)


References:

- https://www.hackersdenabi.net/deep-web-and-dark-web/

- https://www.amazon.com/Tor-Dark-Net-Remain-Online-ebook/dp/B01D1SF82W/

- https://www.nsa.gov/what-we-do/research/ia-research/index.shtml

- https://www.eecs.yorku.ca/course_archive/2016-17/W/3482/Team4_DeepDarkWeb.pdf

- http://www.visualcapitalist.com/dark-web/

- https://teqoflucky.wordpress.com/the-dark-web/

- https://www.theexplode.com/how-to-access-dark-web/

- https://www.digitalfox.media/tech-rhino/the-darkweb-series-part-1/

- https://www.wired.com/1993/02/crypto-rebels/
